It’s no secret that I love me some LogMeIn. The simple, free version is excellent, and I really depend on it for connecting to a few machines I have out on the Internet. Now they’ve come out with LogMeIn Express, a no-frills screen sharing tool. I’ve played with it a little this morning and I see huge potential for it. It offers shared remote control and the ability to send files between people in the meeting. It’s still in beta at this point, but let’s hope they release it without a price tag. It will even be useful as a replacement for CrossLoop, since its download component is all browser based. Nice work LogMeIn! Try not to ruin it with a monthly fee!

Monday, December 14, 2009

Thursday, December 03, 2009

GoogleDNS

I've posted before about how much I like OpenDNS. Well, it seems that Google wants some of that business now, so in early December they created their own public DNS servers. Here's a nice little writeup on it. Is this good news??! Well, as a consumer I'd say yes. For me, more is always better. In the past I always used 4.2.2.1 (which I think is Level 3) for DNS and had great luck with it. I know that it uses IP Anycast, so it always pulls from a server geographically close to you. I'm not sure any of the others do that, but I can say that I've seen the best name resolution performance from OpenDNS...even when you use their filtering. So now it seems that there is a host of DNS servers to choose from:

Level3 (4.2.2.1 - 4.2.2.6)

Google (8.8.4.4 & 8.8.8.8)

OpenDNS (208.67.222.222 & 208.67.220.220)

DNSAdvantage (156.154.70.1 & 156.154.71.1)

An added benefit of Google's DNS is that it responds to pings and 8.8.8.8 is an easy address to remember. :)
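If you want to see which of these answers fastest from your own network, here is a minimal Python sketch (my own illustration, not a tool from any of these providers). It hand-builds a standard RFC 1035 query for an A record, sends it over UDP port 53 to the first address of each provider listed above and reports the round-trip time. The hostname being looked up and the 2-second timeout are arbitrary assumptions, and some of these addresses may no longer answer.

```python
import socket
import struct
import time
from typing import Optional

# First address of each provider from the list above.
RESOLVERS = {
    "Level3": "4.2.2.1",
    "Google": "8.8.8.8",
    "OpenDNS": "208.67.222.222",
    "DNSAdvantage": "156.154.70.1",
}


def build_query(hostname: str, query_id: int = 0x1234) -> bytes:
    """Build a minimal RFC 1035 query for an A record with recursion desired."""
    # Header: ID, flags (0x0100 = RD bit), QDCOUNT=1, ANCOUNT=NSCOUNT=ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    # QNAME: each label is prefixed with its length; a zero byte ends the name
    qname = b"".join(
        bytes([len(label)]) + label.encode("ascii")
        for label in hostname.rstrip(".").split(".")
    ) + b"\x00"
    # QTYPE=1 (A record), QCLASS=1 (Internet)
    return header + qname + struct.pack("!HH", 1, 1)


def time_lookup(server: str, hostname: str, timeout: float = 2.0) -> Optional[float]:
    """Return the round-trip time in milliseconds, or None if no usable reply."""
    query = build_query(hostname)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        start = time.perf_counter()
        try:
            sock.sendto(query, (server, 53))
            reply, _ = sock.recvfrom(512)
        except OSError:  # timeouts and network errors both land here
            return None
        elapsed_ms = (time.perf_counter() - start) * 1000
    # Ignore anything whose ID doesn't match the query we sent
    return elapsed_ms if reply[:2] == query[:2] else None


if __name__ == "__main__":
    for name, ip in RESOLVERS.items():
        ms = time_lookup(ip, "www.google.com")
        result = "no reply" if ms is None else f"{ms:.1f} ms"
        print(f"{name:<13} {ip:<16} {result}")
```

Run it a few times before drawing conclusions: the first query for a name may include the resolver's own recursive lookup, while later ones come straight from its cache.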

Here's a nice blog post from the founder of OpenDNS on Google's public DNS announcement.

Monday, November 30, 2009

Blackberry Bold 9700

Well, I finally upgraded from my 2007 Samsung BlackJack (which I had hacked to Windows Mobile 5.1) to a BlackBerry Bold 9700 and I love it. I’ve been very pleased with the phone quality, and the trackpad kicks ass. I was always a bit hesitant about BlackBerry devices because of the trackball, but the trackpad is nothing if not elegant. The phone has pretty much everything I need.

Friday, November 20, 2009

Windows 7 First Look

I’ve been extremely busy lately with budgeting, reviews and plans for 2010, so I haven’t had as much time to mess with the fun stuff. However, Windows 7 and Office 2007 are in the plans for next year’s project list. Having been an MCSE since 1995 and having recertified 3 times as the operating systems changed, I’ve developed quite a background in Windows. I’ve lived through rollouts, by the hundreds, of every flavor of Microsoft desktop operating system (except that nasty Vista). From what I’m reading so far, Windows 7 does seem to be a significant upgrade from XP and well worth the effort. In particular, the features that seem most compelling to me are DirectAccess, Problem Steps Recorder, booting from a VHD file, BitLocker enhancements, integrated biometric software (thank God…3rd-party apps here in XP and Vista have always been painful) and, believe it or not….searching in the UI. Searching for files and data has always been weak in Windows (they really need an updatedb & locate equivalent), but from what I’ve seen with search filters Windows 7 looks pretty good. Since they have now removed the Classic interface completely, I’ll need to spend some time finding out where they’ve hidden everything. (Damn them.)

We will undoubtedly use MDT 2010 for image development and distribution but I haven’t seen a whole lot of compelling changes in MDT. So far, it looks like they’ve made some work-arounds to the existing pain points but nothing revolutionary. Multicasting will be a nice addition but heck that’s been around for over 10 years in other software distribution products like Ghost anyhow.

I think I’ve decided to move on and get certified as an MCITP: Enterprise Administrator. I don’t believe certifications make you a better engineer, but I do believe the process allows you to take some time to really learn the products and features. That is, if you stay away from the braindump sites and focus on the learning instead of the testing. I remember back when I got my MCSE 3.51 on NT…it took me 6 months. I read every book I could get my hands on, demo’ed every feature in my lab and worked with the product in my job on a daily basis. That is my plan here as well. The things I learned have stuck with me throughout my IT career and have shaped and molded my ability to understand and consume new technology. I’ll get off my soapbox now…

Stay tuned and I plan to blog about the cool new stuff as I run across it in my studies…

Monday, October 26, 2009

OpenVPN misfire

I spent the weekend testing out OpenVPN-AS and ran into one problem. After an hour or two the connection would die and not restart until I completely exited the software and got back in. Once in a while I noticed that it would also lock out my account. After some mulling around, I figured out that it had something to do with the SecurID authentication. I moved from PAM to RADIUS authentication on Friday in hopes that our users could use their keyfobs and not have to remember a separate username/password combo. Although I got it all working, it seems that some kind of reauthentication happens frequently during the session. I'm guessing there is a timing issue, because every once in a while the attempt fails and the session dies. Moving back to PAM (that's basically Linux authentication against the local database) seems to have resolved the issue. Time to get Wireshark out and see what's happening. Stay tuned...

Tuesday, October 20, 2009

OpenVPN-AS

Today I set up our first OpenVPN-AS server and man is it cool. A lot of the things I didn’t like about regular OpenVPN (managing certificates, difficult authentication mechanisms, command line management, etc.) are addressed in OpenVPN-AS. You couldn’t ask for better licensing either….$5 per concurrent connection. That’s a software model I can buy into!

First I set up a CentOS server. It’s version 5.3 with minimal stuff loaded. Then I downloaded and ran the RPM right from OpenVPN.net. After a few small configs in pfSense to port forward HTTPS to the box, I was up and running. I even got RADIUS authentication working against my SecurID box. For testing I just registered for the free 2-user license, but I plan on purchasing more after our pilot is complete. If you want VPN for your business, this package is well worth the cost. The difference between configuring OpenVPN and OpenVPN-AS is huge. OpenVPN-AS is way easier to set up and deal with, both as an administrator and as a user. Now…if they could only include OpenVPN-AS as a package in pfSense…..

Wednesday, October 14, 2009

Super Video conversion

Every once in a while you hit a cool tool that you’ve seen before but forgotten completely. I ran into Super today while researching video conversion tools and remembered how useful it is. Super is a video conversion program that lets you re-encode video files from one format to another. I even like it better than…choke…sniff…VLC…for some conversions, even though it’s not Open Source. :) I ran into this tool a few years ago and it got me out of a tight spot, and it’s even better now. What I really like is its simplicity. VLC tends to force you to learn all about audio and video codecs if you want to get power out of the tool. Super lets you pick an “output container” like mpg, wmv, etc. and it does all the hard work of picking out the settings for you. It’s great for video-challenged peeps like me. Enjoy!

Friday, October 09, 2009

pfsense DNS Forwarding and Overrides

I ran into a small DNS issue when I first rolled out our pfsense firewall. I had 4 active interfaces: inside, outside, dmz and wireless. On the PIX I had the wireless segment go directly to the Internet for name resolution. Requests for “inside” services (on the inside or dmz interfaces) were NAT’ed so that the outside public addresses worked correctly. Not wanting to mess with all that NATing again, I was stuck, because the rules I wrote were based on private IP addresses, which a public DNS server would never return. After messing around a little, I found that when set up as a DNS forwarder, the pfsense box will allow you to override specific DNS entries or even an entire domain. Very, very cool. I simply added the names I wanted to resolve to the override list with their internal IP addresses and bang! The only requirements were that DNS forwarding had to be enabled and the pfsense box had to be acting as the DHCP server on the wireless interface. Simply leave the DNS server values empty in the DHCP settings and pfsense will advertise itself as the DNS server to DHCP clients.
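To make the lookup order concrete, here's a small Python sketch of how a forwarder with overrides decides where an answer comes from. This is my simplified model, not pfsense code: a host override returns its configured address outright, a domain override sends the query to a specific DNS server for that domain, and everything else goes to the upstream servers. The names, addresses and the most-specific-domain-wins rule are illustrative assumptions.

```python
# Hypothetical example data: these names and addresses are for illustration only.
HOST_OVERRIDES = {
    "mail.example.com": "192.168.1.25",   # answer with this address directly
    "www.example.com": "172.16.1.80",
}
DOMAIN_OVERRIDES = {
    "corp.example.com": "192.168.1.10",   # send queries for this domain to this DNS server
}
UPSTREAM = "8.8.8.8"  # where everything else gets forwarded


def answer_source(name, host_overrides=HOST_OVERRIDES,
                  domain_overrides=DOMAIN_OVERRIDES, upstream=UPSTREAM):
    """Return ("address", ip) for a host override, otherwise ("server", ip)
    naming the DNS server the query would be forwarded to."""
    name = name.lower().rstrip(".")
    if name in host_overrides:
        return ("address", host_overrides[name])
    # Check the full name first, then each parent domain, so the most
    # specific domain override wins.
    labels = name.split(".")
    for i in range(len(labels)):
        suffix = ".".join(labels[i:])
        if suffix in domain_overrides:
            return ("server", domain_overrides[suffix])
    return ("server", upstream)


if __name__ == "__main__":
    for query in ["mail.example.com", "ws1.corp.example.com", "www.google.com"]:
        print(query, "->", answer_source(query))
```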

pfsense rocks the house!

Monday, October 05, 2009

Another successful pfsense rollout

This morning, I rolled out pfsense at our biggest site. It was actually the third try, but that’s not pfsense’s fault. A combination of a bad hub and an extremely long arp timeout period on the ISP’s switch scrubbed the first two attempts. (It was really scary to hear the ISP tech say “Sure I can clear the arp cache for you…can you tell me what to type?” Egads!) All in all it went well. One thing I forgot about was access to remote subnets on our WAN. I purposely left them out of the routing table on the firewall thinking I’d use IPSEC tunnels as a failover mechanism to our WAN. Unfortunately, I completely forgot that they get to the DMZ from the inside to see our website. A few quick static routes fixed that in short order.

My biggest surprise was that I had disabled bogon detection during a previous attempt at getting things running, so I had to turn it back on. It turns out that doing that resets the state table and breaks everyone’s existing connections! Luckily I found that early during the scheduled downtime, so no one was the wiser.

Right now I’m backing up the config each time I make changes, so I need to automate the backup on a schedule like I did with the PIX. I’m sure I can work something out with CatTools for this.
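Until then, a scheduled job could at least keep a rolling set of dated copies. Below is a minimal Python sketch that assumes pfsense's config.xml has already been fetched to a local file (how you fetch it, whether by hand, scp or a CatTools job, is up to you and not shown). It copies that file into a backup folder with a timestamp and prunes all but the newest copies. The paths and the retention count of 30 are hypothetical.

```python
import shutil
import time
from pathlib import Path


def rotate_backup(source: Path, backup_dir: Path, keep: int = 30) -> Path:
    """Copy source into backup_dir under a timestamped name, then prune old copies."""
    if keep < 1:
        raise ValueError("keep must be at least 1")
    backup_dir.mkdir(parents=True, exist_ok=True)
    stamp = time.strftime("%Y%m%d-%H%M%S")
    dest = backup_dir / f"{source.stem}-{stamp}{source.suffix}"
    shutil.copy2(source, dest)
    # YYYYMMDD-HHMMSS names sort chronologically, so the oldest copies come first.
    copies = sorted(backup_dir.glob(f"{source.stem}-*{source.suffix}"))
    for old in copies[:-keep]:
        old.unlink()
    return dest


if __name__ == "__main__":
    # Hypothetical paths; point these at wherever your fetched config lands.
    rotate_backup(Path("/var/backups/pfsense/config.xml"),
                  Path("/var/backups/pfsense/history"))
```

Run it from cron (or Task Scheduler) right after whatever fetches the file.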

All in all a successful venture. Two more sites to go and all our firewalls will be pfsense!

Tuesday, September 22, 2009

Windows XP Remote Command Line Backup

I had the need today to connect out to a PC and run a backup on it. It turns out this is trivial to do, even with the standard Windows NTBackup program. From another machine, logged in with admin rights to the target machine, run the following command:

C:\>ntbackup backup \\remotePCnamehere\c$ /j "PC backup name here" /f "c:\backup.bkf"

This backs up the C: drive of the PC named remotePCnamehere to a file called backup.bkf on the C: drive of the machine running the command. I can’t believe I haven’t needed this more often.
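If you need to do this for more than one machine, the same command can be scripted. Here is a minimal Python sketch, assuming it runs on the XP box that has NTBackup and admin rights on each target. The PC names and the C:\Backups folder are hypothetical placeholders, and it only uses the backup, /j and /f options shown above.

```python
import subprocess


def ntbackup_command(pc_name, dest_dir="C:\\Backups"):
    """Build the NTBackup arguments that back up a PC's C$ share to a local .bkf file."""
    share = "\\\\" + pc_name + "\\c$"              # \\PCNAME\c$
    bkf_file = dest_dir + "\\" + pc_name + ".bkf"  # C:\Backups\PCNAME.bkf
    return ["ntbackup", "backup", share, "/j", pc_name + " backup", "/f", bkf_file]


if __name__ == "__main__":
    # Hypothetical machine names; replace with your own.
    for pc in ["ACCT-PC01", "ACCT-PC02"]:
        exit_code = subprocess.call(ntbackup_command(pc))
        print(pc, "exit code", exit_code)
```

Passing a list lets Python handle the quoting, so the job name with a space in it arrives at NTBackup as one argument.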

Enjoy!

Monday, September 14, 2009

Friday, September 04, 2009

Data Destruction Fun

We often have the need to destroy data on old equipment, including hard drives, floppy drives, backup tapes, CDs, etc. This has always been painful for me because we used to rely on our recycling vendor to assure us that data was being disposed of properly...and I don’t trust anyone. :) For hard drives I like Darik’s Boot and Nuke, but it’s a pain to use on loads of removable media. So I went out and bought an Erase-o-Matic 3.

Enjoy the destructive goodness!!!

Friday, August 28, 2009

Technology Conferences

I just returned today from ILTA's 2009 conference in Washington D.C. The conference is always a great opportunity to network and find Legal Technology specific information. This was my fifth year, and although attendance was impacted by the economy the education and networking didn't suffer a bit.

So what takeaways did I come home with? Well, there were many. Email management still seems to be a painful topic for most law firms. Time and time again I heard from firms struggling with these issues:

- Lack of firm email retention policies. Conflicting and rapidly changing requirements result in firms talking but doing nothing. Save everything forever ends up being the standard.

- Lack of tools to satisfy the needs of complex policies that require different retention/archival times based upon areas of law, groups of attorneys or specific industry requirements.

- Lack of scalable archival tools. There are some on the market but things like archival indexes and two tiered storage options leave firms wanting more.

- Huge software maintenance costs and low value. Say no more...

- Slow Outlook performance due to "Save everything forever"

- Issues upgrading Exchange because of compatibility problems with plug-ins for metadata removal, virus scanning, DMS integration, encryption, unified messaging, etc....

- Records management as it pertains to email. Even simple point-and-click tools are too much of a pain for an attorney if he/she can't do it from their BlackBerry.

Surprisingly, spam and antivirus weren't the huge issues they once were. Thanks to the development of cloud and appliance based tools, you can actually buy your way out of these problems today.

Lots of talk about SharePoint and other portals. Many firms have committed to SharePoint and pay quite a pretty penny to keep it running and keep specialists on board. Others are frustrated by its limitations and have developed ways "around" SharePoint. Personally, I don't like either answer. Our extranet provider has turned out to be an utter disappointment in regard to flexibility and performance, so I'll need to work on this a bit.

Social networking was also a new topic picking up interest....both in what to do about it and how to use it to your advantage. Although I didn't sit in on all the sessions, I didn't hear specific answers to these questions, but it was great to toss the topic around with others.

E-discovery raised its ugly head again (sorry, that's just how I feel about it), but there was not too much new in this arena. I'm sure the e-discovery vendors would tell you otherwise, but it seemed to me like they are still catching up on features that people need and are still "behind" the curve. Hopefully in the next year or two they will move from reactive to preventative. Isn't it interesting that they spend all their time worrying about old data and don't offer tips on how to keep corporations out of trouble? They already know what not to do, but they don't communicate that effectively. It would be a whole new market for them if they looked at what their clients wanted. A speaker one morning made a great reference to this by showing a picture of a drill. When the CEO of the company asked his employees what it was, they said it was what they made... they said they were the world's best power tool supplier (or something like that). Then he had a slide with a picture of a hole in a wall. The CEO told his company that this is what people actually wanted...not the drill. A very insightful observation...

There were many more topics covered and I'll try and write a second edition of this post after I review my notes more. This is just a summary of the topics I found most compelling.

Tuesday, August 18, 2009

ShoreTel Firmware Upgrade and Lessons Learned

So we did an upgrade on our ShoreTel server, switches and phones a few weeks ago and had one small issue. Some (most) of the phones were reporting a “Firmware Version Mismatch” when viewed through ShoreWare Director’s IP Phone Maintenance screen. (To get to this screen go to QuickLook, then pick your site, then pick your switch….about 1/2 way down the screen you see the link to “IP Phones Maintenance”.) The problem ended up being that the DHCP options on the VOIP vlan (192.168.19.x/24) didn’t include the option for the ftp server on the ShoreWare server.

One other cool thing I learned is that you can telnet into a ShoreTel phone and look at its options. To do this you need to use a tool called “phonectl”, which is part of the ShoreTel server. Here is how you do it:

From the ShoreTel server directory, run the following commands:

1. phonectl -pw <phone password> -telneton <ip address of phone>

2. telnet <ip address of phone>

3. Now, to see the config run “printSysInfo” from the telnet prompt.

Cool.

Wednesday, August 12, 2009

Microsoft Word is Toast

http://blog.seattlepi.com/microsoft/archives/176223.asp

Long Live Open Office!!!

I’m saying this in jest…I’m sure Microsoft will find their way around this mess and Word will be just fine. It still makes me chuckle.

Monday, August 10, 2009

Microsoft Licensing Rant

<Begin Rant>

It’s always nice when you pay a vendor top dollar for a product and then have them slap you in the face with their licensing restrictions and complicated activation processes. I fondly remember my first run-in with this nonsense…the dongle. As in “the dongle that broke AutoCAD’s back.” (For those of you that remember, way back when… AutoCAD required a dongle. Their sales dropped significantly as users shied away from their systems to use alternatives that weren’t hobbled with software protection.)

I’ve been reviewing the changes in Microsoft’s Volume Licensing procedures and the whole process still strikes me as a sign that Microsoft has lost as much confidence in their customers as their customers have lost in them. Don’t get me wrong, I believe that for commercial software vendors to work and make a buck they should be paid. However, hamstringing customers that are actually paying the bill is like a waiter spitting in your food as he hands it to you because you “might” run out before you pay the check.

So it turns out that very little has changed with Microsoft licensing. They still force you to use KMS (and have your pc check in every 180 days) or MAK (and burn a license that can never be recovered should you ever have need to rebuild the box from the ground up).

They give these examples of why this process is “good” for you:

- It reduces the risk of running counterfeit software. Um…s’cuse me but if I paid for it I did my part. It’s Microsoft’s burden to hunt down counterfeit outfits not mine. What they are really saying is it makes Microsoft’s job easier by making it harder (not impossible) for counterfeit software to exist. Again…why am I paying for this, why do I have to do all this extra work setting up KMS servers and why is this my concern?

- It assures that your copy of Windows is genuine. Once again…why do I care if I’ve already paid for it? What they are really trying to say is that we don’t trust you. To top that off if you aren’t running genuine software you don’t get to talk to Abu in India if you run into any problems with our software. But…if you can prove to us that you actually paid for the software we will provide support to you by an untrained technician from another country in another time zone who talks with a thick accent and is sure to walk you through at least one reboot before kicking the call up to his buddy that had the 2 week training course. Thanks. That makes me feel better.

- Activation = greater peace of mind. Um… No it doesn’t. The ability to support my users around the world with software that’s not gonna nag them or spontaneously combust into a flurry of license warnings and reduced functionality = greater peace of mind. Wait..that’s not fair. They got rid of the reduced functionality part. Sorry.

- Assists with license compliance. Really? For licensing compliance I have to run multiple reports and then compare what I bought to what the reports say. Then I have to verify that my payments were for the right products and made on time. Then I have to verify that my KMS server or MAK proxy has a clear line of sight to Microsoft at all times so the whole kit and kaboodle doesn’t tip over while I’m trying to get actual non-licensing work done. How is that assisting me again?

The product key way of doing licensing was bad, but these approaches are worse. They are harder to administer, more likely to leave users unable to work and a huge slap in the face of paying customers.

On a final note, I bet that the restrictions on licensing in Windows 7 (and Windows Server 2008 R2, for that matter) will limit the number of counterfeit installations. I bet they will also help sway businesses (especially ones that couldn’t afford Microsoft software anyhow) over to Open Source software solutions. This has been, and will continue to be, detrimental to the sales of Microsoft products. That, my friends, is technology karma at its best.

</End Rant>

Thursday, August 06, 2009

Port Listener for firewall testing

I found this cool little tool today for testing which ports are open through a firewall. In my lab, I’m trying to simulate my pfsense firewall, and it’s a pain to set up a box as a mail server, an http server, an ftp server, an https server, etc… just so that I can test each rule. This little utility runs and lets you pick a port to “listen” on. Then to test, say, port 25, just run “telnet 10.1.1.1 25” and you’ll get a “Hello” response if the port is open. Simple, effective and elegant tool to add to your toolbox.
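If you don’t have that utility handy, the same idea fits in a few lines of Python. This is a rough sketch of my own, not that tool: it listens on whatever TCP port you give it and answers every connection with “Hello”. The default port of 25 and the exact greeting text are assumptions.

```python
import socket
import sys


def open_listener(port, host="0.0.0.0"):
    """Bind a TCP socket to host:port and start listening."""
    srv = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    srv.setsockopt(socket.SOL_SOCKET, socket.SO_REUSEADDR, 1)
    srv.bind((host, port))
    srv.listen(5)
    return srv


def serve_hello(srv, banner=b"Hello\r\n"):
    """Greet each client with the banner and hang up; return once srv is closed."""
    while True:
        try:
            conn, addr = srv.accept()
        except OSError:  # the listening socket was closed
            return
        with conn:
            print("connection from %s:%d" % addr)
            conn.sendall(banner)


if __name__ == "__main__":
    # Port to listen on comes from the command line; 25 is just a default.
    port = int(sys.argv[1]) if len(sys.argv) > 1 else 25
    print("listening on TCP port %d" % port)
    serve_hello(open_listener(port))
```

Run it on the lab box behind the firewall (ports below 1024 usually need root or admin rights), then from the other side try telnet 10.1.1.1 25 as above. It only covers TCP; UDP rules need a different test.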

Wednesday, August 05, 2009

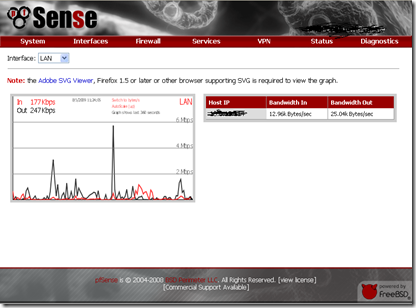

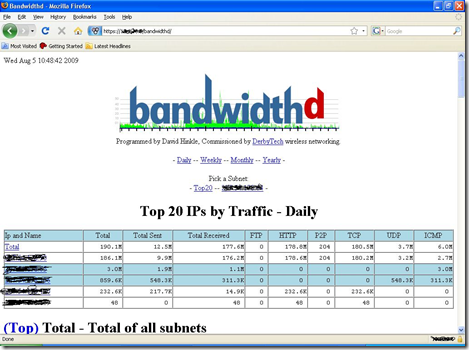

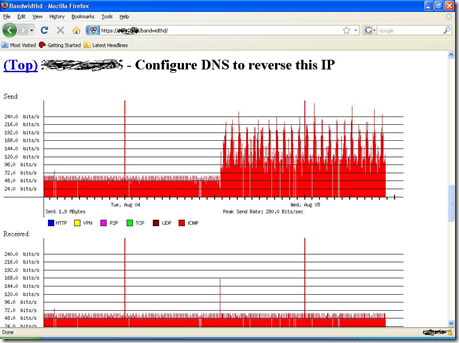

pfsense Monitoring (rate vs. darkstat vs. bandwidthd)

So now that we have our first pfsense box up and running, I’ve been comparing and contrasting what options I have as far as monitoring goes. I’ve loaded rate and darkstat on one box and bandwidthd on another.

I’ve had a lot of trouble with rate. It installs ok, but it seems temperamental about which browser you use. (Firefox seems to work way better than IE here.) This may be due to the requirement for the Adobe SVG Viewer plugin, but I can’t really tell. Unlike the other two tools, which add themselves as new options to the menus, rate plugs into the built-in Status –> Traffic Graph item. When it’s working it’s ok, but the numbers change so fast that it’s not as useful as the other tools, which are more focused on long-term trending. As Adobe has discontinued support for the Adobe SVG Viewer, I’d probably lean away from this tool anyhow.

darkstat is nice, but as it runs on port 666 it’s generally something I only open up from the inside interface. That limits it a bit for me, as I do a fair amount of remote monitoring. However, it has a “hosts” page which breaks down traffic by IP, which is very useful. You can even sort by traffic in, traffic out and total traffic. You can find it under Diagnostics –> darkstat.

bandwidthd is probably my favorite of these tools. It nests itself under the https port, so you can use it remotely. It drops your top 20 IPs into a list for easy inspection, and it breaks the traffic down into individual graphs for a variety of services.
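Under the hood, darkstat's hosts page and bandwidthd's top-20 list boil down to summing traffic per IP and sorting. Here is a simplified Python sketch of that idea, assuming you already have flow records as (source IP, destination IP, bytes) tuples. That input format, the 192.168.1.0/24 inside network and the function name are my own illustrative assumptions, not how either package actually collects or stores its data.

```python
import ipaddress
from collections import defaultdict


def top_talkers(flows, lan="192.168.1.0/24", n=20, sort_by="total"):
    """Sum bytes per inside host and return up to n rows of
    (ip, bytes_in, bytes_out, total), largest first by the chosen column."""
    net = ipaddress.ip_network(lan)
    bytes_in = defaultdict(int)
    bytes_out = defaultdict(int)
    for src, dst, nbytes in flows:
        if ipaddress.ip_address(src) in net:
            bytes_out[src] += nbytes  # an inside host sent this traffic
        if ipaddress.ip_address(dst) in net:
            bytes_in[dst] += nbytes   # an inside host received this traffic
    rows = [
        (ip, bytes_in[ip], bytes_out[ip], bytes_in[ip] + bytes_out[ip])
        for ip in set(bytes_in) | set(bytes_out)
    ]
    column = {"in": 1, "out": 2, "total": 3}[sort_by]
    rows.sort(key=lambda row: row[column], reverse=True)
    return rows[:n]


if __name__ == "__main__":
    sample = [("192.168.1.10", "8.8.8.8", 1200),
              ("8.8.8.8", "192.168.1.10", 48000),
              ("192.168.1.20", "203.0.113.5", 5000)]
    for row in top_talkers(sample):
        print("%-15s in=%d out=%d total=%d" % row)
```

The sort_by switch mirrors darkstat's in/out/total sorting; n=20 mirrors bandwidthd's top-20 list.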

As you can see, there are quite a few options to slice data in pfsense. The built-in Status –> RRD Graphs are also excellent for long-term trending. pfsense has proven to provide more capabilities in regard to traffic monitoring and collection than I had with my old PIX.

Happy firewalling!

Thursday, July 30, 2009

TrueCrypt Hacked at Blackhat Conference

If you’ve been following the news today, some 18-year-old genius has supposedly hacked TrueCrypt. After reading how his hack works, I’m not all that concerned. The attack runs as a shim between the OS and the TrueCrypt interrupt request. To get that installed on a box you need either physical access or admin rights on the machine…and either one has to happen while the machine is running. Um..s’cuse me… but if you give someone admin rights or physical access to your PC while it’s running THEY OWN YOUR BOX ANYHOW!!!!! Come on guys, this isn’t an attack!?!? It’s somewhat concerning that this code is out there, but it seems to me that simple precautions like antivirus, malware protection, XP’s firewall, etc. all severely limit how effective this attack would be in the real world.

On to some real news….did you see that the project manager for CentOS is MIA? That concerns me more than this hack…

Monday, July 27, 2009

Take your Linux Server’s Temperature

If your hardware exposes an ACPI thermal zone (common on server-class hardware), you can run the following command to read the temperature of your CPU. The zone name (THM0 here) varies from machine to machine:

cat /proc/acpi/thermal_zone/THM0/temperature
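Newer kernels have been moving this information out of /proc/acpi and into sysfs under /sys/class/thermal, where each zone's temp file reports millidegrees Celsius. Here's a small Python sketch that checks both locations and globs for every zone instead of hard-coding THM0. Which files exist depends on your kernel and hardware, so treat it as a starting point rather than a guaranteed reading.

```python
import glob
import re


def parse_proc_acpi(text):
    """Parse the legacy /proc format, e.g. 'temperature:             45 C'."""
    match = re.search(r"(-?\d+)\s*C", text)
    return float(match.group(1)) if match else None


def parse_sysfs(text):
    """sysfs reports millidegrees Celsius, e.g. '45000' means 45.0 C."""
    return int(text.strip()) / 1000.0


def read_temperatures():
    """Return {path: degrees C} for every thermal zone that can be read."""
    temps = {}
    sources = [
        ("/proc/acpi/thermal_zone/*/temperature", parse_proc_acpi),
        ("/sys/class/thermal/thermal_zone*/temp", parse_sysfs),
    ]
    for pattern, parse in sources:
        for path in sorted(glob.glob(pattern)):
            try:
                with open(path) as f:
                    value = parse(f.read())
            except (OSError, ValueError):  # unreadable file or unexpected contents
                continue
            if value is not None:
                temps[path] = value
    return temps


if __name__ == "__main__":
    for path, celsius in read_temperatures().items():
        print("%s: %.1f C" % (path, celsius))
```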

Open Source Based Penetration Testing

I did some work this weekend involving some penetration testing. I used a LiveCD called BackTrack (which is Ubuntu based). It comes loaded with a ton of tools and really makes it easy to do some pretty intensive testing. I won’t go into the details on what all I tested, but suffice it to say this will be added to my “must have” LiveCD collection, which includes:

- Knoppix – pretty much the standard in LiveCD’s

- Damn Small Linux – used for quick access to stuff while on other people’s systems

- pfsense – Built in sniffer makes this a really easy remote tool to use for grabbing traces.

- GParted Live – Used for partition management

- SystemRescueCD – Great little system rescue tool

- Darik’s Boot and Nuke – Easily erase hard drives

- SMART – Data forensics (I used to love Helix, but now you gotta pay for it.)

- CloneZilla – Disk cloning at its best.

How ‘bout you? Got Tools? What’s your fav?

Monday, July 20, 2009

Open Source Software in Production

I was talking to a friend on the phone today about Open Source Software. He was wondering what stuff I actually use in production. I figured I’d take a minute and document the stuff that I personally use and we as a Firm use. Here’s the list I came up with after a few minutes. I’m sure we use more…this is just the stuff I could think of:

nagios

cacti

pfsense

syslogd

phplogcon

wireshark

nmap

mediawiki

apache

php

FireFox

TrueCrypt

putty

FileZilla

FileTransfer Device

greenshot

VLC

UltraVNC

UltraVNC SC

7Zip

Audacity

NotePad++

WinSCP

Snare

Alfresco

OpenOffice

MySQL

PHPMyAdmin

OpenVPN

Password Safe

IPTables

OSSEC

Logwatch

PDFCreator

Blat!

We are pretty much standardized on CentOS as our distro of choice. I won’t get too religious on the reasons why, other than to say the decision was made based upon cost, performance, security and what is built into the kernel as far as drivers go. The two commercial apps we run on CentOS are CommVault (media agents) and Kayako SupportSuite.

So there you have it.

Friday, July 17, 2009

Open Source Forensics

I used to really like the Helix package for forensic purposes.

Friday, July 10, 2009

Attention Printer Manufacturers

<Begin Rant>

You guys kill me…you really do. You’ve been in the market longer than the PC manufacturers and you still fail to look around and learn a lesson about how the market works. Printers have become one of the two evils in IT (printing and email). Here is what your customer base wants…do these things and you’ll become the market leader:

- Design a “line” of printers that all use the same damn toner cartridge. I only want to stock and buy 1 type of toner cartridge. Save your money on the manufacturing end and design a universal print cartridge that works in a family of printers and stick with the damn thing when new models come out. Ok…why is this so damn important? Here’s why:

- It saves time ordering new product. We as the customer don’t have to cross reference printer models just to find out which damn toner cartridge we need to take upstairs to a printer. It also saves on those ones we ordered that were wrong and we now have to send back.

- It saves money. We don’t have to stock cartridges for each model so we need less storage space. Heck for shelf life issues alone (yes toner does have a shelf life) it would be more efficient.

- It saves the environment. As printers age, those extra cartridges that were never used get tossed. If we can just upgrade the printer to the next model and it uses standard toner we are good to go.

- You save time. Questions about which model customers should buy are gone. Fewer phone calls. (Nobody wants to speak to Abu in India about printers anyhow.)

- You save money. Lower manufacturing costs. Think Ford’s assembly line. One car, one model, one color….

- You save more money. Fewer items to stock, less warehouse space needed.

- You cut out the aftermarket guy. Since all the costs come down, there’s little margin for the aftermarket guy. Starve them out by designing them out.

- Design a line of printers that all work with the same print driver. OH THE HORROR!!!! Yes. Printer drivers haven’t been reliable since HP had the HPIII. At that point all printers were made compatible with that driver and everyone was happy. Forget compatibility….unify the driver. It’s less for you to test and prove and it’s easier for the customer to load everywhere.

- Have a thin driver for each model. We really couldn’t give a shit about another systray icon eating up system resources just to tell us we are almost out of toner. Use regular logging (eventlog in Windows and syslog in Unix)…we are looking there already on a daily basis.

- Build in cost recovery and authentication features. As one of the remaining hard costs associated with printing, help us keep track of it so we can charge the right people. It helps us justify buying that bigger printer for those people that need it.

- Have the printer phone home when something dies and send us parts automatically if we have a support contract. Take a lesson from Data Domain on this one. They’ve got it right.

- Build in reporting. The web interfaces are ok, but what I want is a print detail report each month showing total pages printed and how much it changed from last month. Keep a running graph of monthly/weekly/yearly usage.

- Don’t build a tabletop printer that can’t hold at least one ream of paper. Seriously, why is this so hard?

- Use universal parts for as many components as you can in a printer family. Power supplies, paper trays, heck even fusers should be portable across a family line of printers. I’ll pay extra for a printer that I can use for spare parts later.

- Be honest about the actual life cycle of a printer. When I buy a printer I want to know how many pages it’s going to print in its lifetime. Figure it out and tell me. That helps me develop my ROI.

That’s it for now….but I’m sure I could think of more if I had time. Printers are nothing but a pain in the ass right now and your service stinks…all of you. Fix it already.

</End Rant>

Wednesday, July 08, 2009

VLC 1.0.0 is out

VLC is one of my favorite Open Source tools. It's a media player that comes with a ton of codecs and

Enjoy...

Wednesday, July 01, 2009

Basic pfsense to pfsense IPSEC tunnel config

Part of my security redesign this year is to replace our aging Cisco PIX boxes with pfsense. Yesterday I spent the day setting up a simulated environment for 3 of our offices over an Internet connection. I was able to get the IPSEC tunnel up and running between two pfsense boxes pretty quick. Here’s a quick and dirty process for getting it all to work:

Site 1: Outside IP: 200.200.200.201/29

Outside Gateway: 200.200.200.202

Inside IP: 192.168.1.0/24

Site 2: Outside IP: 100.100.100.100/29

Outside Gateway: 100.100.100.101

Inside IP: 192.168.2.0/24

Note: I assume everything is wired correctly and there is a router which will provide connectivity between 200.200.200.202/29 and 100.100.100.101/29. Also, if you are faking Internet addresses like I am above, be sure they aren’t in the bogon list that pfsense uses. Otherwise you’ll have to remove the bogon firewall rules on the WAN interface.
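Before configuring anything, it's worth sanity-checking the addressing plan. Here is a small Python sketch using the standard ipaddress module: it confirms each outside gateway sits inside its site's WAN subnet and that the inside subnets don't overlap (a tunnel like this one routes by subnet, so two sites using the same LAN range won't work without NAT). The SITES dictionary simply restates the example addresses above; the check list itself is my own choice, not anything pfsense runs.

```python
import ipaddress
from itertools import combinations

# The example addressing plan from above.
SITES = {
    "Site 1": {"wan": "200.200.200.201/29", "gateway": "200.200.200.202", "lan": "192.168.1.0/24"},
    "Site 2": {"wan": "100.100.100.100/29", "gateway": "100.100.100.101", "lan": "192.168.2.0/24"},
}


def check_sites(sites):
    """Return a list of human-readable problems (empty means the plan looks sane)."""
    problems = []
    for name, site in sites.items():
        wan = ipaddress.ip_interface(site["wan"])
        gateway = ipaddress.ip_address(site["gateway"])
        if gateway not in wan.network:
            problems.append(f"{name}: gateway {gateway} is outside {wan.network}")
        elif gateway == wan.ip:
            problems.append(f"{name}: gateway and WAN address are both {gateway}")
    lans = {name: ipaddress.ip_network(site["lan"]) for name, site in sites.items()}
    for (a, net_a), (b, net_b) in combinations(lans.items(), 2):
        if net_a.overlaps(net_b):
            problems.append(f"{a} and {b}: inside subnets {net_a} and {net_b} overlap")
    return problems


if __name__ == "__main__":
    print(check_sites(SITES) or "addressing plan looks OK")
```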

Step 1: Install pfsense and set the local IPs on both firewalls.

Step 2: Log on to the web interface for pfsense on each box and assign the WAN addresses.

Step 3: Enable IPSEC (VPN->IPSEC->Enable IPSec). Do this on both firewalls.

Step 4: Add a tunnel on Site 1’s firewall to Site 2 by adding a tunnel and changing only the following items:

* Remote Subnet: 192.168.2.0/24

* Remote Gateway: 100.100.100.100

* Phase 1 Lifetime: 28800

* PreShared Key: thisisasecretdon’ttell

* PFS Key Group: 2

* Phase 2 Lifetime: 3600

Now hit the save button

Step 5: Add a tunnel on Site 2’s firewall to Site 1 by adding a tunnel and changing only the following items:

* Remote Subnet: 192.168.1.0/24

* Remote Gateway: 200.200.200.201

* Phase 1 Lifetime: 28800

* PreShared Key: thisisasecretdon’ttell

* PFS Key Group: 2

* Phase 2 Lifetime: 3600

Now hit the save button

Step 6: Be sure to “Apply Changes” when prompted on each firewall.

NOTE: SEE COMMENTS…STEP 7 IS NOT NEEDED…

Step 7: Allow Authentication Header (AH, IP protocol 51) and ISAKMP (UDP/500) with firewall rules so that IPSEC can pass. Firewall->Rules: WAN Tab.

Rule 1

* Source IP: Any

* Destination IP: WAN Address

* Protocol: AH

* Port: none (AH is an IP protocol, not a TCP port)

Hit Save

Rule 2

* Source IP: Any

* Destination IP: WAN Address

* Protocol: UDP

* Port: 500 (isakmp)

Hit Save

Do this on both firewalls and Apply Changes when prompted

Step 8: Allow all traffic to pass through the IPSEC tunnel. Firewall->Rules : IPSEC Tab

Rule 1

* Source IP: Any

* Destination IP: Any

* Protocol: Any

* Port Range: Any

Hit Save

Do this on both firewalls and Apply Changes when prompted

That’s pretty much it. You should now be able to ping the inside interfaces between the firewalls with the ping diagnostic tool. From here you can further restrict traffic with firewall rules as needed.

If something goes wrong, use Status->System Logs to check out what is going on, on both the Firewall and the IPSec tabs. Note that any firewall denies for the IPSEC interface show up with enc0 as the interface on the Firewall tab of System Logs.

Enjoy!

Monday, June 29, 2009

mtr…the love child of ping and traceroute

Just a quick note to tell you to check out mtr if you haven’t already. It combines the features of ping and traceroute in an interactive display that keeps running until you quit. There are a few command line switches as well to make life easier for automation. Here’s a generic example of its output going out to Google:

My traceroute [v0.75]

centos.mydomain.com (0.0.0.0) Mon Jun 29 10:10:25 2009

Keys: Help Display mode Restart statistics Order of fields quit

Packets Pings

Host Loss% Snt Last Avg Best Wrst StDev

1. 192.168.1.1 0.0% 6 0.3 0.4 0.3 0.6 0.1

2. 66.53.18.65 0.0% 6 37.7 21.2 7.4 37.7 12.8

3. ???

I’ve changed the IP addresses above to protect the innocent, but it should give you a general idea of what to expect. For years as a network engineer I used “ping -t” to watch for downed gear to come back online. This tool gives you that ability and much more, as it keeps track of numerous interesting and useful statistics.
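On the automation side, mtr's report mode (mtr --report -c 10 www.google.com) runs a fixed number of cycles, prints the table once and exits, which makes it easy to post-process. Here's a rough sketch, assuming the report rows look like the ones above (hop number, host, Loss%, then the timing columns); the function name and threshold argument are my own invention:

```sh
#!/bin/sh
# flag_lossy_hops THRESHOLD
# Reads mtr-style rows on stdin (" N. host loss% snt last ...") and prints
# "host loss%" for every hop whose packet loss is above THRESHOLD percent.
# Rows without a loss column (like "3. ???") are skipped.
flag_lossy_hops() {
    awk -v max="$1" '
        $1 ~ /^[0-9]+\.$/ && $3 ~ /%$/ {
            loss = $3
            sub(/%$/, "", loss)              # "25.0%" -> "25.0"
            if (loss + 0 > max + 0) print $2, $3
        }'
}
```

Something like mtr --report -c 10 www.google.com | flag_lossy_hops 5 in a cron job would then only produce output when a hop is dropping more than 5% of its packets.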

Wednesday, June 24, 2009

Filetransfer Appliance

Our Firm constantly gets requests to send large data files back and forth between clients. Up until now, we’ve been using a mixture of email attachments and a locked down FTP site.

Wednesday, June 17, 2009

Cool and Different Presentations

Having worked in the IT sector now for about 20 years, I have a finely tuned bullshit monitor. This is especially true of presentations. Most hour-long presentations I sit in on have 5 valuable minutes of new or interesting material. We all know “cool” when we see it, and we all know what the other 55 minutes of the presentation are for… to fill time. So when I saw my first Google I/O Ignite presentation I about fell out of my chair. The presentations are 5 minutes long each, with 20 PowerPoint slides. Oh yeah, and the slides advance automatically every 15 seconds, so the speaker isn’t in control… he/she has to keep up. I love this format. It forces the speaker to cut to the raw essence of the topic and eliminate the bs. My hat is off to the folks at Google on this. I’m presenting a small piece of an open source firewall talk at ILTA this year (pfsense… yeah baby!) and I may very well steal this concept for that presentation.

As an avid viewer of TED presentations, I’m always intrigued by new and different presentation styles. This presentation is done in a style that I’ve copied a few times and had much success with. It’s based on the “Lessig Method” (example here), which I find drives the listener to pay attention just to keep up. At last year’s ILTA, I did a presentation on Wireshark in this format and it was well received. This is my presentation style of choice if my presentation is a tutorial or training session.

What presentation styles have knocked your socks off? Hit me up in the comments and let me know. I’m all ears.

Thursday, June 11, 2009

Linux System Maintenance and Setup

There are a few things I like to do on all my Linux servers when the box is set up and from time to time to verify all is well. Here is a short list of some of these items:

- Remove rights for root to log in via ssh.

In /etc/ssh/sshd_config change "PermitRootLogin yes" to "PermitRootLogin no". Then restart sshd.

- Boot into command line mode instead of the GUI (runlevel 3 instead of 5).

In /etc/inittab change the line "id:5:initdefault:" to "id:3:initdefault:".

- Set up logwatch to email you a daily report of what's happening on the box.

In /usr/share/logwatch/default.conf/logwatch.conf change "MailTo = root" to "MailTo = yourname@youremail.com".

- Better yet... send all mail for root to your email account.

Edit /etc/aliases and change "#root: marc" to "root: yourname@youremail.com". Then run /usr/bin/newaliases to rebuild the aliases.db file.

- Update your box nightly at midnight... but skip kernel updates, as they may break stuff.

Edit your cron jobs file (crontab -e) and add the line:

0 0 * * * yum --exclude='kernel*' -y update

- Reboot your machine weekly (every Sunday at 1am).

Edit your cron jobs file (crontab -e) and add the line:

0 1 * * 0 /sbin/shutdown -r now

- Cut forced disk checks back to about once a quarter, because they can make booting take a long time with large drives.

Run something like:

tune2fs -c 12 -C 0 /dev/VolGroup00/LogVol00

Assuming you reboot once a week, this forces a check every 12 mounts, or roughly once a quarter.

- Get a good look at the processes and what started them on your system.

Run "ps auxwww".

- Get a good baseline of your hard drive's health and age before you go live. Note that this won't work on a VM, and you will need to change /dev/hda to match your machine's config (point it at the whole disk, not a partition).

Run "smartctl --all /dev/hda".

- Determine what ports are open and listening.

Run "netstat -tulnp". The -l switch lists listening sockets; piping through "grep LISTEN" instead would hide UDP ports, since UDP sockets have no state.
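For the "from time to time" part, a small check like this can confirm the root login change stuck. It's a sketch under a couple of assumptions: keyword and value are separated by whitespace, Match blocks are ignored, and (as sshd_config documents for most keywords) the first uncommented value wins. The function name is hypothetical:

```sh
#!/bin/sh
# root_login_setting [CONFIG]
# Prints the first uncommented PermitRootLogin value in CONFIG
# (default /etc/ssh/sshd_config), or "unset" if the keyword is absent,
# in which case sshd's compiled-in default applies.
root_login_setting() {
    awk 'tolower($1) == "permitrootlogin" { print $2; found = 1; exit }
         END { if (!found) print "unset" }' "${1:-/etc/ssh/sshd_config}"
}
```

Running [ "$(root_login_setting)" = "no" ] || echo "root can still log in via ssh" from a weekly cron job would nag you if someone flips it back.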

What things do you do?

I’m working on authoring a system maintenance document outlining things that should be done for maintenance on a daily, weekly, monthly, quarterly and annual schedule. Let me know what you are doing and I’ll email you a copy of my document when it’s done.

Friday, June 05, 2009

More on SELinux

Yesterday I posted about some issues I had with SELinux after a kernel update to CentOS. My post was commented on by Dan Walsh, a top notch security guy from Red Hat. I clicked on his name and found his blog which turned out to be a goldmine for me. In reading his blog I found a link to the best resource I’ve seen on SELinux for a system admin. It’s the Security-Enhanced Linux User Guide. I read the whole thing in about 90 minutes and it provided some insight into SELinux that I’ve found nowhere else. This is why I love Open Source and the Internet. I’m sure if I posted a note about some feature in Windows I’d never hear back from anyone in the developer community at Microsoft about how to fix my problem. Heck, I didn’t even post my note to a newsgroup…just my humble blog. It’s great to see people so involved and interested in what they do that they go looking for issues just to help people and keep up to date on what others are saying.

Thanks Dan. I truly appreciate it.

Thursday, June 04, 2009

CentOS Kernel upgrade breaks SELinux

So last night I did a yum update on one of our web servers which included a kernel update. All went well until the reboot at which time SELinux was preventing httpd from starting. Dropping SELinux into permissive mode (setenforce permissive) allowed httpd to start and things went well except for the banter of SELinux messages in my logs bitching about one thing after another. At first I thought about a system-wide relabel of the drive…but I’m truthfully a bit concerned that the hammer approach might break too many things. After some research on the web I took this approach instead:

- Grep out the line items from /var/log/messages that seem to be creating a problem. I ran something like: grep avc /var/log/messages > fix1 (a bare tail only looks at the last 10 lines of the log)

- Use the audit2allow script to build a policy module of fixes that can be loaded into SELinux (audit2allow -M fix1a < fix1). This creates a file called fix1a.pp (along with the fix1a.te source).

- Load fix1a.pp with the semodule command (semodule -i fix1a.pp)

That’s it. I had to do this a few times as errors popped up, but it seems to have fixed the problem. Be sure to read through the offending lines in the messages log to verify that things that are being denied should actually be working.

Here is an example of some of the errors I was getting:

Jun 4 16:15:45 MY-WEB01 kernel: type=1400 audit(1244197545.492:6268): avc: denied { append } for pid=10103 comm="httpd" path="/var/log/httpd/access_log" dev=dm-0 ino=851362 scontext=root:system_r:httpd_t:s0-s0:c0.c1023 tcontext=system_u:object_r:file_t:s0 tclass=file

Jun 4 16:12:29 MY-WEB01 kernel: type=1400 audit(1244112349.412:6262): avc: denied { search } for pid=9737 comm="httpd" name="mysql" dev=dm-0 ino=851641 scontext=root:system_r:httpd_t:s0-s0:c0.c1023 tcontext=system_u:object_r:file_t:s0 tclass=dir
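Before feeding a file like fix1 to audit2allow, it helps to boil each denial down to who was denied what, on which object. Here's a rough awk-based sketch (the function name is mine; it assumes the space-separated key=value layout of the log lines above and values without embedded spaces) that prints the permission, the process, the target path or name, and the target context:

```sh
#!/bin/sh
# summarize_avc [LOGFILE]
# One line per "avc: denied" entry: permission process target tcontext
summarize_avc() {
    grep 'avc: *denied' "${1:-/var/log/messages}" | awk '
        # value of a space-delimited key=value pair, quotes stripped; "-" if absent
        function kv(key,    s) {
            if (match($0, " " key "=[^ ]+")) {
                s = substr($0, RSTART + length(key) + 2, RLENGTH - length(key) - 2)
                gsub(/"/, "", s)
                return s
            }
            return "-"
        }
        {
            perm = "-"
            if (match($0, /[{] [^}]* [}]/))          # the "{ append }" part
                perm = substr($0, RSTART + 2, RLENGTH - 4)
            target = kv("path")                      # files usually log path=...
            if (target == "-") target = kv("name")   # directories may log name=...
            print perm, kv("comm"), target, kv("tcontext")
        }'
}
```

Run against the two errors above, it prints "append httpd /var/log/httpd/access_log system_u:object_r:file_t:s0" and "search httpd mysql system_u:object_r:file_t:s0", which makes it easy to spot that httpd is the process and file_t is the label involved in both.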

I’m still in favor of SELinux, although I think I’ll be taking more precautions (like snapshotting a machine) before I update kernels. Once I got past the errors I put SELinux back into enforcing mode (setenforce enforcing).

Friday, May 29, 2009

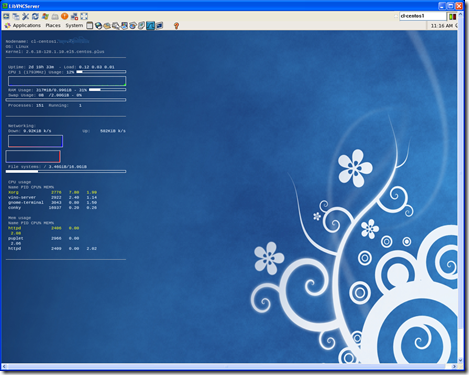

Conky is Cool

Conky is a tool used to display performance metrics of a Linux box in an active window or right on the background of the computer. If you’ve used Sysinternals’ BGInfo it’s kinda like that, but better because it updates in real time. It’s very configurable, but usually looks something like this:

That’s a bit hard to read so here is an example of the kind of data it can provide:

The configuration for this is pretty easy on CentOS. Here’s how I did it:

yum install libX11-devel libXext-devel libXdamage-devel libXft-devel glib2-devel

* watch the wrap…that’s all 1 line

Then download conky here. Extract it somewhere like /usr/local/src or ~, cd into the new directory and run:

- ./configure

- make

- make install

Now you need to make a file named .conkyrc and drop it in your home directory. I used one I found online, but there are a ton of them available here with screenshots showing what they look like.

To start it under Gnome, open a command line and type conky. If it bothers you that it’s tied to that terminal window, add this script to your machine (save it as /usr/bin/startconky.sh and chmod it to 755) and put a link to it in your panel or on your desktop.

#!/bin/sh

# by: ??

# click to start, click to stop

if /sbin/pidof conky > /dev/null

then

exec killall conky

else

sleep 1

conky

exit

fi

*Note…script blatantly stolen from here.

Now when you click on the conky icon in your panel it will start and stop conky.

Pretty cool stuff.

Friday, May 22, 2009

How I Backup MySQL

I’m documenting this mostly for my benefit, but I figured it may be of use to others. This is how I back up my MySQL servers.

- Create a backup directory. I use /backup

- Verify mysqldump exists under /usr/bin

- Verify you have a user account with rights in MySQL to the database you want to back up. In this example I will back up a database called bluedb. For this demo I’ll use the username “Joe” and the password “Shmoe”.

- Create a script directory under /backup

- Create the backup script. Here’s mine (largely written by my friend Jason):

- Name the script something like mysqlbackup.sh and drop it in /backup/script

- Change rights on the mysqlbackup.sh so it has rights to execute (chmod 755 mysqlbackup.sh)

- Now you can test it by running: /backup/script/mysqlbackup.sh

- Next I generally edit my cron jobs (crontab -e) and add the line 0 0 * * * /backup/script/mysqlbackup.sh so it runs every night at midnight

#!/bin/sh

BACKUP_DIR=/backup

DUMP=/usr/bin/mysqldump

DATE=`date +%Y%m%d`

# DATABASE INFO

DB=bluedb

# First we will backup the structure of the database

${DUMP} --user=Joe --password=Shmoe --no-data ${DB} > ${BACKUP_DIR}/${DATE}_${DB}_backup_structure.sql

# Now we will backup the database itself

${DUMP} --user=Joe --password=Shmoe --add-drop-table ${DB} > ${BACKUP_DIR}/${DATE}_${DB}_backup.sql

# Now we will remove .sql dumps older than 3 days (only directly in BACKUP_DIR, so the script directory is left alone)

find ${BACKUP_DIR} -maxdepth 1 -type f -name '*.sql' -mtime +3 -exec rm {} \;

*Note: There is some serious line wrapping going on above.

That’s it. The script dumps both the data and the structure of the database and keeps 3 days’ worth of backups. From here my main backup script for the box picks these up during its normal daily file level backup.
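One cheap extra check before trusting those files: as far as I know, mysqldump writes a "-- Dump completed" comment as the very last line of its output unless comments are turned off (--skip-comments or --compact), so a dump that was cut short won't end with it. A minimal sketch built on that assumption (the function name is hypothetical):

```sh
#!/bin/sh
# dump_completed FILE
# Succeeds if FILE is non-empty and its last line is mysqldump's
# "-- Dump completed" trailer; fails for empty or truncated dumps.
dump_completed() {
    [ -s "$1" ] && tail -n 1 "$1" | grep -q '^-- Dump completed'
}
```

For example, appending for f in ${BACKUP_DIR}/${DATE}_*.sql; do dump_completed "$f" || echo "incomplete dump: $f"; done to the backup script would flag a bad night in the cron mail.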

Enjoy the backup goodness!!!

Wednesday, May 20, 2009

Data Domain is the Bee’s Knees

Last year when I was budgeting for 2009 I decided to take a leap and move our online backups from regular disk storage (a SATABeast) over to a compressed and deduped device. After looking at a few options (namely Exagrid and Data Domain) I chose Data Domain, basically because the landing zone concept of Exagrid gets under my skin. So far I’ve been very impressed with the Data Domain system. It’s behaving better than I had expected. Here’s a graph of one of our sites and how well it is working:

So here we see that the raw backup (the red line) is about 15.6TB. After compression (the blue line) the data takes up about 10.6TB. After deduplication (the green line) it’s only 2.8TB. As I’m replicating this across the country it sure makes life better. So yes, I love my Data Domain system.
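Putting rough ratios on those numbers (my own arithmetic from the graph values, rounded):

$$
\frac{15.6\,\text{TB}}{10.6\,\text{TB}} \approx 1.47\times\ \text{(compression)},\qquad
\frac{10.6\,\text{TB}}{2.8\,\text{TB}} \approx 3.79\times\ \text{(deduplication)},\qquad
\frac{15.6\,\text{TB}}{2.8\,\text{TB}} \approx 5.57\times\ \text{(overall)}
$$

$$
1 - \frac{2.8}{15.6} \approx 0.82
$$

In other words, the stored copy is roughly 82% smaller than the raw backup, and the two stages multiply out ($1.47 \times 3.79 \approx 5.57$).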

Tuesday, May 19, 2009

Doing more with less

If you are like me, a Windows convert, you are probably popping open nano, emacs or some other command line editor a lot as you look through configuration files. Yeah, I can use vi when I have to, but I’m happier and more productive in a full screen text editor. Anyhoo, I’ve used the “more” command in Linux and Windows for quite a long time and I like it for quick checks, but now that I’ve been playing with Linux a lot I’ve seen how much more powerful the “less” command is.

To see the contents of a file just type “less filename.txt”. It automatically displays the file on the screen and magically the page up, page down, home and end keys all work for navigation. Even better, you can run a simple search by preceding the search term with a forward slash. For example, to search a file for the word “apple” while in a less session just type “/apple”. Cool. You even get the benefit of text highlighting to make the search more effective. (If you hate the highlighting just hit Esc-u to turn it off.) Once you are in a search, the letter n takes you to the next found word and a capital N takes you to the previous found word. Pretty cool. To exit a less session just type q for quit. Of course the beauty of all this is that you aren’t actually editing the file so you can do no harm.

To see what line you are on or how far you are into a file, start the program with the -M switch (less -M filename.txt). Here are some other cool tricks (from a shameless cut and paste):

- Quit at end-of-file: To make less automatically quit as soon as it reaches the end of the file (so you don't have to hit "q"), set the -E option.

- Verbose prompt: To see a more verbose prompt, set the -m or -M option. You can also design your own prompt; see the man page for details.

- Clear the whole screen: To make less clear and repaint the screen rather than scrolling when you move to a new page of text, set the -C option.

- Case-less searches: To treat upper-case and lower-case letters the same in searches, set the -I option.

- Start at a specific place in the file: To start at a specific line number, say line 150, use "less +150 filename". To start where a specific pattern first appears, use "less +/pattern filename". To start at the end of the file, use "less +G filename".

- Scan all instances of a pattern in a set of files: To search multiple files, use "/*pattern" instead of just "/pattern". To do this from the command line, use "less '+/*pattern' ...". Note that you may need to quote the "+/*pattern" argument to prevent your shell from interpreting the "*".

- Watch a growing file: Use the F command to go to the end of the file and keep displaying more text as the file grows. You can do this from the command line by using "less +F ...".

- Change keys: The lesskey program lets you change the meaning of any key or sequence of keys. See the lesskey man page for details.

- Save your favorite options: If you want certain options to be in effect whenever you run less, without needing to type them in every time, just set your "LESS" environment variable to the options you want. (If you don't know how to set an environment variable, consult the documentation for your system or your shell.) For example, if your LESS environment variable is set to "-IE", every time you run less it will do case-less searches and quit at end-of-file.

Happy viewing!!!

Friday, May 15, 2009

Diving into Wikis

A recent project popped up at the Firm in regard to the storage and retrieval of unstructured, uncategorized data. The request was to have a piece of software help to keep track of websites, contact information, client history and government programs related to a specific area of law. After looking at numerous products including mind mapping tools, data tree tools, $harePoint and databases, I picked a wiki as the best method to use to get started. After reading and reviewing what was out there, I decided to try both phpwiki and MediaWiki.

The entire MediaWiki install took roughly 15 minutes. I won’t blog about the process because it’s already in plain English here. What I really liked about MediaWiki was (1) the fact that it’s what Wikipedia is hosted on (2) there are a ton of already developed plug-ins (3) the documentation is really good and abundant and (4) any idiot can edit it as proven by Wikipedia (shout out to Jerry on phrasing that point so eloquently!).

So far so good. The basic product is pretty simple to use although the Wiki editing requires some inline html/Wordstar-like editing skills. I’ll let you know how it goes but so far it’s been pretty cool.

Tuesday, May 12, 2009

OSSEC Active Responses

So I’ve been playing around a lot with OSSEC. Active responses are one of my favorite features. They remind me of the old firewall days when countermeasures

Here is how they work…

The ossec.conf file lists both commands and active-responses. I’m going to describe how the firewall-drop active-response detects and drops attempts to log in too many times to your server. The command configuration is shown here:

<command>

<name>firewall-drop</name>

<executable>firewall-drop.sh</executable>

<expect>srcip</expect>

<timeout_allowed>yes</timeout_allowed>

</command>

Now this is stock code included with OSSEC so you can get this working in minutes. Notice that the file structure is XML. That not only makes it easy to find and understand the code but it makes it simple to extend with any text editor as well.

Here we see the declaration of a command, firewall-drop. It’s tied to a shell script called firewall-drop.sh (which lives at /var/ossec/active-response/bin on my server). This script, like pretty much all active-response scripts, receives a number of variables from OSSEC when it is called. The first is the action (add or delete), which tells the script to add or delete a firewall rule. The second is the username (user), which isn’t actually used in this specific script. The third is the source IP address (srcip), which in our case is the IP of the guy trying to log in unsuccessfully; the expect line says this command needs that value. The timeout_allowed setting goes hand in hand with the action variable. A timeout lets you put a rule in for, say… 600 seconds and then remove it again. This will thwart attackers’ automated attacks while not completely locking out the forgetful or fat-fingered admin.
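To make the argument passing concrete: as far as I can tell, the stock firewall-drop.sh reads the action, user and source IP from its first three positional arguments. Below is a hypothetical dry-run stand-in (not the shipped script, and the exact iptables rules the real one adds may differ) that only prints the command it would run, so you can see how an add and the later delete pair up:

```sh
#!/bin/sh
# Hypothetical dry-run active response: prints (does not run) an iptables
# command. $1 = action (add|delete), $2 = user (unused here), $3 = srcip.
ar_dry_run() {
    action=$1; srcip=$3
    case "$action" in
        add)    flag=-I ;;   # insert a DROP rule for the offending IP
        delete) flag=-D ;;   # remove that same rule once the timeout expires
        *)      echo "invalid action: $action" >&2; return 1 ;;
    esac
    [ -n "$srcip" ] || { echo "missing srcip" >&2; return 1; }
    echo "iptables $flag INPUT -s $srcip -j DROP"
}
```

So ar_dry_run add - 192.0.2.10 prints iptables -I INPUT -s 192.0.2.10 -j DROP, and 600 seconds later the matching delete call prints the -D version that takes it back out.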

The active response configuration in the ossec.conf file looks like this:

<active-response>

<!-- Firewall Drop response. Block the IP for

- 600 seconds on the firewall (iptables,

- ipfilter, etc).

-->

<command>firewall-drop</command>

<location>local</location>

<level>6</level>

<timeout>600</timeout>

</active-response>

The first part in between the <!-- and --> is just a descriptive comment. The command matches up with the command defined above. The location tells OSSEC where to run the response. In this case, this is a local installation of OSSEC so it’s set to local. The level is the trigger which kicks off this event: the response runs for alerts at level 6 or above. There are a set of predefined rules (in /var/ossec/rules) which categorize different detected events by level. One of these triggers is the detection of a brute force ssh password attack. There are numerous levels so I can’t go into all of them here, but you can easily take a look at the rules files on a standard installation for more info. Additionally, you can trigger on multiple types of things, not just levels. There is a predefined rules group called “sshd” so I could have used something like that as well. Unfortunately, the sshd rules group fires for both successful and unsuccessful attempts so I couldn’t use that in this example.

Once things are configured a simple restart of the ossec system (/var/ossec/bin/ossec-control restart) will put it all into action.

So here is how it works. Someone tries a brute force attack on the server over ssh and logs are generated in the secure log. OSSEC watches those logs, uses the rules files to detect the attack and assigns the alert level. That level then fires off the active response script firewall-drop.sh, which adds a rule to the iptables config, effectively blocking the source IP address. After 600 seconds, the script is called again with the “delete” action, the rule is removed and all is well.

OSSEC is very powerful and this example just scratches the surface. This has rapidly become one of my favorite open source tools of all time.

Thursday, May 07, 2009

Building a BDD/Microsoft Deployment USB Boot drive from XP

In my earlier post about using Microsoft Deployment off a USB drive I gave instructions for using the Diskpart tool to format, partition and set the USB drive as active. Turns out that only works under Vista. Under XP, when you do a “list disk” in diskpart it can’t even see the drive. So… as a workaround, here is what we did. I’m using a SanDisk Cruzer 8GB stick, but they are all about the same. First I uninstall the U3 autoloading nonsense. This ends up reformatting the drive, which is just fine. Then I use a copy of bootsect.exe from the WAIK (installed under c:\Program Files\Windows AIK\Tools\PETools\x86) and run:


bootsect /nt60 f:

Then I copy the contents of the .iso created from the Microsoft Deployment point (the media one) onto the drive. From here it boots and all is well.

Tuesday, May 05, 2009

SSH Protection in IPTables

So now that we are off of Windoze IIS as our main production web server and onto Linux, I’ve been watching the logs very closely to verify all is well. I’ve got OSSEC, Logwatch and a few “custom” scripts installed for this purpose.

iptables -I INPUT -p tcp --dport 22 -i eth0 -m state --state NEW -m recent --set

iptables -I INPUT -p tcp --dport 22 -i eth0 -m state --state NEW -m recent --update --seconds 60 --hitcount 4 -j DROP

Essentially, a timer is kicked off at each attempt to log in. On the fourth attempt within 60 seconds, the IP is blocked. If someone runs a script against the server, the first 3 attempts will be denied because of incorrect passwords and the rest will be blocked because of repeated attempts. Since each blocked attempt resets the timer, they can keep sending user/pass combos, but until they back off for 60 seconds they will just be dropped. Not a perfect solution, but it certainly stops the madness I’m currently seeing. After we get out of developer mode I’ll probably increase it to 10 minutes.
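If the timing logic is hard to picture, here is a simplified model of it. This is my own approximation of how the recent match behaves with these rules, not the kernel code: every new connection from an IP is recorded, and a connection is dropped once the recorded attempts in the last 60 seconds, counting the current one, reach the hitcount of 4. The function name is hypothetical:

```sh
#!/bin/sh
# simulate_ssh_limit SECONDS HITCOUNT T1 T2 ...
# Simplified model: each attempt time (in seconds) is recorded, even when
# dropped; an attempt is dropped when the attempts within the last SECONDS
# seconds (including itself) reach HITCOUNT.
simulate_ssh_limit() {
    window=$1; hits=$2; shift 2
    printf '%s\n' "$@" | awk -v w="$window" -v h="$hits" '
        {
            t[NR] = $1 + 0                   # record this attempt
            n = 0
            for (i = 1; i <= NR; i++)        # count attempts inside the window
                if (t[i] >= $1 - w) n++
            print $1, (n >= h ? "DROP" : "ACCEPT")
        }'
}
```

For attempts at 0, 10, 20, 30, 40 and 200 seconds, simulate_ssh_limit 60 4 0 10 20 30 40 200 shows the first three accepted, the ones at 30 and 40 dropped, and the attempt at 200 accepted again because the attacker backed off for more than 60 seconds.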

I also looked at two scripts: fail2ban and one called protect-ssh.

Enjoy.

Friday, May 01, 2009

GMER Rootkit detection tool

I ran across this cool little free tool for Windows based root kit detection today. There are actually two tools on the site, catchme.exe and gmer.exe. Catchme.exe seems to be a command line tool for root kit detection and gmer.exe (whose name changes on each download to thwart malware from detecting it on the way down) is a gui app. As I’ve never had a root kit infection I can’t comment on how well they work, but they look like pretty good tools and they are recommended in the book on OSSEC, so that can’t be all that bad. :)

Enjoy!

Wednesday, April 29, 2009

OSSEC rocks my socks

OSSEC is a security tool which can be best classified as a host intrusion detection system. It can be installed in three modes: local, agent or server. I installed it as a local install on my test box so that I could see how it worked. What a cool app!

I’ve been hitting the research hard looking for log analysis tools for apache and linux and this tool is going to be very useful. This may even remove the need for my centralized syslog box as it parses out the good info from the bad. We will see…. I haven’t dropped this on my production web server yet, but I will soon.

Two thumbs up for this useful tool…

Oh yeah… there is an agent for Windoze boxes as well. ;)

Tuesday, April 28, 2009

Show all users cron jobs

Just a quick one today…it’s been a busy busy few days. :) Here’s a quick way (when logged in as root) to see all the cron jobs configured on a system:

for user in $(cut -f1 -d: /etc/passwd); do crontab -u $user -l; done

Watch the line wrap and enjoy!
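A slightly chattier variant of that one-liner labels each user's jobs and skips the "no crontab for ..." noise. It's a sketch under the same assumption (run as root); the function name and the optional passwd-file argument are my own additions:

```sh
#!/bin/sh
# show_all_crontabs [PASSWD_FILE]
# Prints each user's crontab under a "### user" header and silently skips
# users that have none. Run as root so crontab -u can read other users' tabs.
show_all_crontabs() {
    passwd_file=${1:-/etc/passwd}
    cut -f1 -d: "$passwd_file" | while read -r user; do
        # crontab -l exits non-zero (and complains on stderr) when there is no crontab
        if tab=$(crontab -u "$user" -l 2>/dev/null); then
            printf '### %s\n%s\n' "$user" "$tab"
        fi
    done
}
```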

Tuesday, April 21, 2009

Housecleaning Linux Style

It’s been quite a busy few days here at the Firm. We’ve gone live with a new website (hosted on Linux of course), exchanged a ton of electronic files with one of our vendors via TrueCrypt and finished off our monthly reboots (thanks Microsoft). So the web server was probably the most fun. During the process I enabled Google Analytics, awstats, logwatch, selinux and iptables. Google Analytics is great for getting the quick and dirty stats for your web server. This is the first time I’ve enabled it site-wide and it has really been eye-opening. Do yourself a favor and use it on your site to track where people are going. I even enabled it on my blog and it seems my posts on Equallogic and HP Procurve are my top hits.

Awstats is a Perl script that parses your Apache access_log and reports back on your web site statistics in a meaningful way. (It can handle other apps like ftp as well, but I’m only using it for httpd.) Lots of great info here, but it takes a bit more tuning to get it running the way you want it. I’m still tweaking the output but I hope to get it all done soon.

Logwatch is a tool built in to most distros that parses your log files and rolls up some really basic, but important statistics which it then optionally emails to you. You are probably running this tool already and don’t even know it. Do yourself a favor and edit your logwatch.conf file (mine is under /usr/share/logwatch/default.conf) and add your email address to the “MailTo =” line. As this is a web server I’m interested in who is logging in remotely and logwatch details out SSHD login info :

sshd:

Authentication Failures:

root (192.168.31.111): 216 Time(s)

unknown (192.168.51.11): 48 Time(s)

Invalid Users:

Unknown Account: 48 Time(s)

Sessions Opened:

root: 27 Time(s)

Failed logins from:

xx.xxxx.xx.xxx: 216 times

root/password: 216 times

Illegal users from:

xx.xxx.xx.xxx: 48 times

oracle/password: 48 times

Users logging in through sshd:

root:

xx.xxx.xx.xx(aserver.ontthenet.com): 21 times

xx.xx.xx.xxx(anotherserver.onthenet.com): 3 times

xx.xx.xx.xx(ahost.outtheresomewhere.com): 2 times

xx.xx.xxx.xxx(anotherhost.outtheresomewhere.com): 1 time

Received disconnect:

11: Bye Bye

xx.xxx.xx.xxx : 264 Time(s)

SFTP subsystem requests: 21 Time(s)

Logwatch will summarize much more than just the sshd logs, but I wanted to give you a sample of its power.

SELinux is a mandatory access control system; you can think of it as a kind of application layer firewall. It will keep services/daemons contained in their own “space” so that they all get along. Should something get hacked, SELinux will keep the hacker from accessing data beyond what the compromised service has rights to. It’s a little hard to get your arms around, but it’s well worth the time invested learning how it works.

iptables is a very flexible and useful firewall. One thing that I learned how to do was to restrict the number of login attempts so that attackers get blacklisted by IP address if they try to run a brute-force password script against your server. Here’s the quick and dirty:

iptables -A INPUT -i eth0 -p tcp --dport 22 -m state --state NEW -m recent --set --name SSH

iptables -A INPUT -i eth0 -p tcp --dport 22 -m state --state NEW -m recent --update --seconds 60 --hitcount 8 --rttl --name SSH -j DROP

You can use these as a head start. Lots and lots of buttons and knobs to turn, that’s for sure.

Enjoy.

Tuesday, April 14, 2009

NTOP on CentOS 5.3 for Netflow Monitoring

I did an NTOP install on CentOS 5.3 today and it was a little different than I’ve done before.

- Install a repository that has the ntop package available: “rpm -Uhv http://apt.sw.be/redhat/el5/en/i386/rpmforge/RPMS/rpmforge-release-0.3.6-1.el5.rf.i386.rpm”

- Install ntop “yum install ntop”

- Run ntop “ntop”

- When asked, you’ll need to supply a password for the default admin account. It gets a little lost in the start up noise but if you scroll back you should see the request.

- run “service ntop start”

- run “chkconfig ntop on”

- Now it should be up and running and should restart at the next reboot as well.

- Allow ports 3000/tcp and 2055/udp if you have firewalling enabled. Port 3000/tcp is for ntop and port 2055/udp is for netflow.

- From another PC web browse http://yourserverip:3000

- Now, enable Netflow: From the menus select Plugins, Netflow, Enable

- Now make sure you are monitoring on the correct interface. Admin, Switch NIC. For me the interface was NetFlow-device.2 [id=1].

- Now set your Netflow defaults. Plugins, Netflow, View/Configure.

- Select the device you want, Hit the Edit Netflow Device button.

- I left the name alone as NetFlow-device.2

- Change local collector udp port to 2055 (the default port).

- Hit the Set Port button

- Virtual Netflow Interface is the interface on the router (indicated as the flow-export source interface below) that will be sending you the netflow stream of data. Also put its mask. (For me this was 192.168.1.1/255.255.255.0)

- Hit the Set Interface Address button

- Aggregation – none (This was my preference)

- Hit the Set Aggregation Button

- The only other thing I changed was debug. I turned it off.

- Hit the Set Debug button

So the next step was to configure our router to point to the ntop box.

- Log in to privileged mode on the router and enter configuration mode (conf t)

- (config)#ip flow-export source <interface number>

- (config)#ip flow-export version 5 peer-as

- (config)#ip flow-export destination <ip address> <port number>

- (config)#ip flow-cache timeout active 1

- Now change to the interface you want to monitor

- (config)# interface <interface>

- (config-if)# ip route-cache flow

- (config-if)# bandwidth 1544

- (config-if)# end, then save the config from privileged mode with # wr

In the above example I used a few placeholders: <interface number> is the local interface you want to send the data from (faste 0/0 for me). It’s NOT the one you want to monitor. <ip address> and <port number> are the IP address and the port that ntop will be listening on. The default port is 2055 udp. The bandwidth of 1544 (kbps) is the speed of a T1 line. You’ll have to adjust that for your line speed.

Ok, pop back to the web interface and look at the Netflow Statistics (Plugins, Netflow, Statistics). You should eventually see the Packets Received number start to grow. If it’s growing you’re getting data. Now you should be able to browse through the menus and start to look at your data. It takes a while for the data to build and be meaningful, but it’s pretty cool.

Drop me a comment if you are interested and I’ll run through what some of the data means.